Abstract

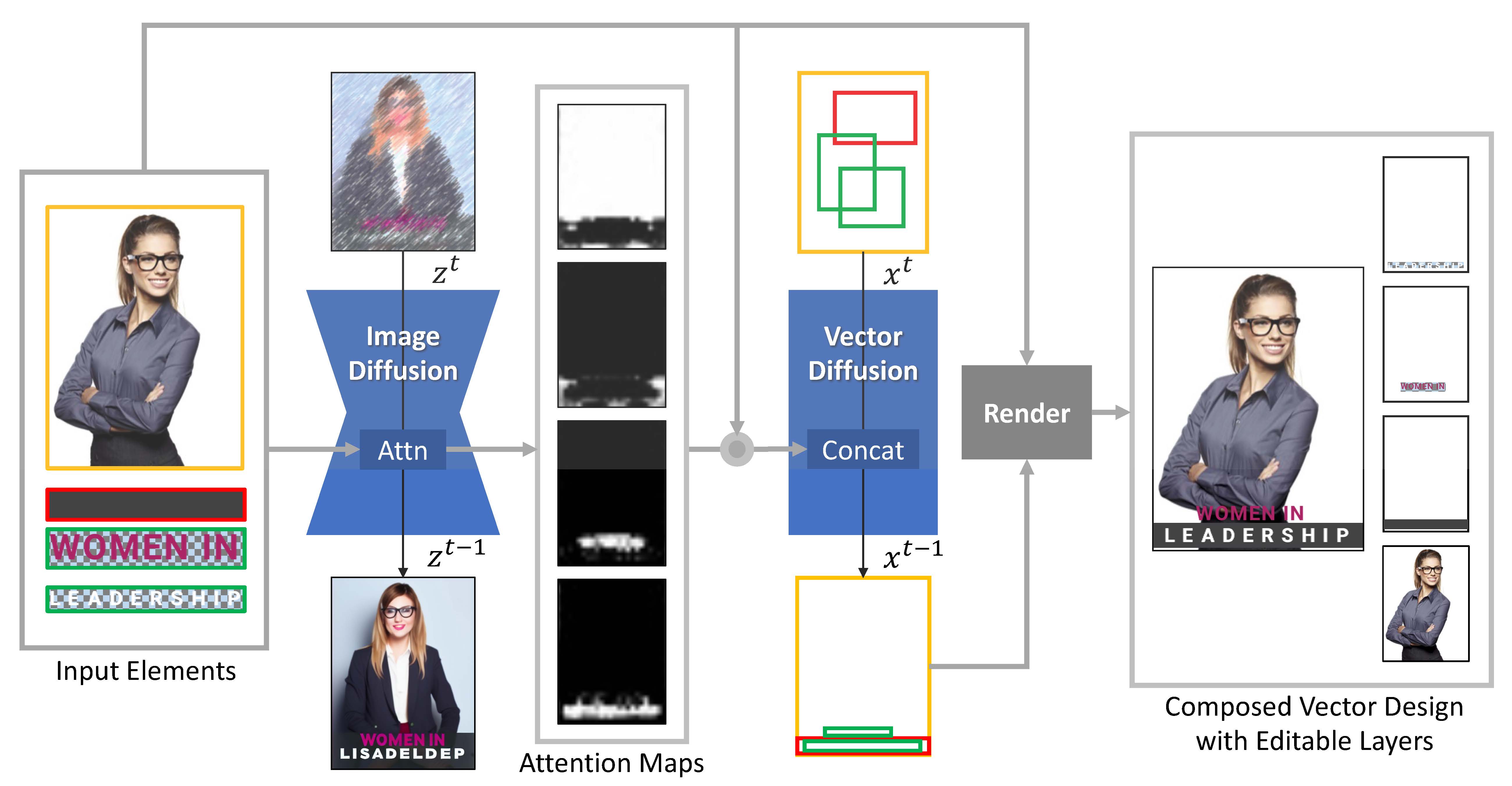

This paper proposes an image-vector dual diffusion model for generative layout design. Unlike prior efforts, which mostly ignore the visual information of individual elements and of the whole canvas, our approach harnesses a large pre-trained image diffusion model to guide layout composition in a vector diffusion model, providing a better understanding of salient regions and higher-level reasoning about inter-element relationships.
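To make "vector diffusion model" concrete, here is a minimal sketch of the standard DDPM forward (noising) process applied to a layout encoded as vectors rather than pixels. It is an illustration under stated assumptions, not the authors' method. The box encoding `[x_center, y_center, width, height]` in [0, 1], the linear beta schedule, and the helper names `linear_beta_schedule`, `alpha_bars`, and `q_sample` are all hypothetical. The image-diffusion guidance described above is not modeled.

```python
import math
import random

# Hypothetical layout encoding (an assumption, not the paper's exact one):
# each element is [x_center, y_center, width, height], normalized to [0, 1].


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Standard DDPM linear noise schedule: beta_t rises linearly over num_steps."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas):
    """Cumulative products abar_t = prod_{s<=t} (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def q_sample(layout, t, abar, rng):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(abar_t) * x_0, (1 - abar_t) * I).

    Coordinates are first rescaled from [0, 1] to [-1, 1], as is common for
    diffusion models; each coordinate gets independent Gaussian noise.
    """
    a = abar[t]
    noisy = []
    for box in layout:
        noisy.append([
            math.sqrt(a) * (2.0 * v - 1.0) + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
            for v in box
        ])
    return noisy


if __name__ == "__main__":
    rng = random.Random(0)
    abar = alpha_bars(linear_beta_schedule(1000))
    # Two hypothetical elements: a title bar near the top and an image below it.
    layout = [[0.5, 0.2, 0.8, 0.1], [0.5, 0.6, 0.6, 0.4]]
    print(q_sample(layout, 0, abar, rng))    # nearly the clean (rescaled) boxes
    print(q_sample(layout, 999, abar, rng))  # almost pure Gaussian noise
```

A denoising network trained to reverse this process would generate layouts by starting from noise. In the approach described above, that reverse process is additionally guided by the pre-trained image diffusion model.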

Code and Data

Coming soon!

Citation

@InProceedings{Shabani_2024_CVPR,
    author    = {Shabani, Mohammad Amin and Wang, Zhaowen and Liu, Difan and Zhao, Nanxuan and Yang, Jimei and Furukawa, Yasutaka},
    title     = {Visual Layout Composer: Image-Vector Dual Diffusion Model for Design Layout Generation},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2024},
}